Tag Archives: MDM

Practical artificial intelligence and it’s application to traditional business intelligence

There are many books and photos that talk about the so-called revolution of artificial intelligence or AI I appreciate their enthusiasm however, I don’t think that is really being recognized that much of what they have invented i can be used in reality to greatly, reduce the time and effort to creat the kind of things we’ve had a creat for decades And they are essential to the creation of AI, which is predicated on learning and contact

One of the things that we will address is, there is a proclivity to use much language much of it is not very semantic. Today folks are describing architecture that already have descriptions. We have a mix of marketing terms and creative terms that mean the same thing, but they use words from the past. It’s causing confusion.

Just one example, maybe the term dimensionis one thing it meant to me thing 10 years ago come today it’s being used in a different context, in AI is it’s dimension or parameter or a feature There are many people of many years in a IT language is important it’s semantic

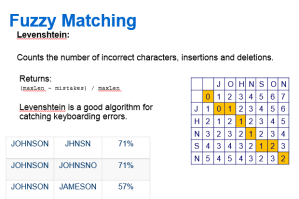

Another is the term similarity . It is used completely different in AI versus traditional, fuzzy matching. true the concept is the same but the Technical use Is different

There is no doubt of the benefit of what’s been created through the use of neural networks and transformers tthat hey can have tremendous positive impact on delivering business intelligence with the aid of artificial intelligence, machine, learning, and Deep Learning subsequently

I have been deeply involved in business intelligence, data quality, data profiling, and MDM and Data Governance for several decades.

I would like to take you on a journey and be able to help you exploit all these capabilities today and yesterday we are experience in the evolution of what we’re doing it is not a revolution. It is an evolution. if anything I hope to help achieve a basic understanding and terminalnology used in information architecture and various techniques that we have that will help , frankly nobody has a corner on the best approach it has all been done before at the logical level I want to be a part of helping us leverage, reuse, and apply what we were doing for decades, to what is now being introduced in the last several years you have to judge among the three goals, cheaper faster, better or we can guarantee is cheaper and faster. It’s up to you to make it better not necessarily the technology.

More to come . . .p

AI LLM New Project Roles including Prompt Engineering and Role AI Scientist and integrated data and information

I’d like to offer some advice on transitioning skills and knowledge skills and knowledge who worked hard to retainto include some of the new AI and NLM developments it’s actually less impactful, and better than you may think

Well, I dressed up in a little bit for now let’s talk about prompt engineers You most likely have currenb SME’s or expert on your current data and requirements

First, do you notice I used the term AI scientist Instead of data scientist , A data scientist Currently is actually a AI model scientist and they will help you apply. We are concerned here with how a lot of folks have opinions heuristic no not necessarily fact-based already we are going to suggest some techniques, and provide some mentoring to explore this important factor in AI is proper training we specialize in providing techniques and mentoring in separate information which is Formulated and an opinion and facts or Data, which, cannot change

There is a series of steps involved in preparing for the use of Data inAI and Chat in the form of LLM models. This is not much different and you may have most of the information already gathered in order to properly design the requirements for your model we would collect the phone.it is important to realize the steps are critical, for you have confidence in your models putput which will be your result of integrating, your Word documents, your Presentations,, spreadsheets, and, of course your actual data.

We wiKeaton, Billy, and modeling of information words versus modeling and requirements for data preparation . There is a difference that is extremely important and in line with what you’ve been doing.I know that Data preparation is not glamorous, but in my 20+ years you’ll get nowhere without proper data preparation you can’t AI it you can’t impute it you need to discuss requirements with people and write them down and then execute it The AI will make the legwork, faster. but in the end you’ll have to review it otherwiseit otherwise you may end up needlessly retracing your steps based on Improper preparation I know that Data preparation is not glamorous, but in my 20+ years you’ll get nowhere without proper data preparation you can AI it you can’t imputed you need to discuss requirements with people and write them down and then execute a I will do it. Faster time is the legwork, but in the end you’ll have to review with Stuck you may end up needlessly retracing your steps based on.I know that Data preparation is not glamorous, but in my 20+ years you’ll get nowhere without proper data preparation you can AI it you can’t imputed you need to discuss requirements with people and write them down and then execute a I will do it. Faster time is the legwork, but in the end you’ll have to review with Stuck you may end up needlessly retracing your steps based on. improper preparation. This can be at Floydd by phone, the proper steps.

1. Word document

2. Presentations

3. Spreadsheets

4.Data reports

5. Data quality report for AI preparation

6.Internet

7.Other Sources (Network,Internet or Local)

We have suggested tools/techniques/open source.and suggestions for each of these. Don’t let that bother you, however, is important with today’s capabilities in AI integrate your words your thoughts, your abstraction, and your actual data together in order for you. They’re trustworthy results from your AI.

We will be providing a separate post on each of these and then finally how they come together our point is that the what you’ve been doing to understand and form requires for tradition BI can be reutilized and extend it for AI

Evolving Data Into Information through Lineage

Information lineage through data DNA

Ira Warren Whiteside

ORIGINS

COMMON SENSE

We in IT have complicated and diluted the concept and process of analyzing data and business metrics incredibly in the last few decades. We seem to be focusing on the word data.

“There is a subtle difference between data and information.”

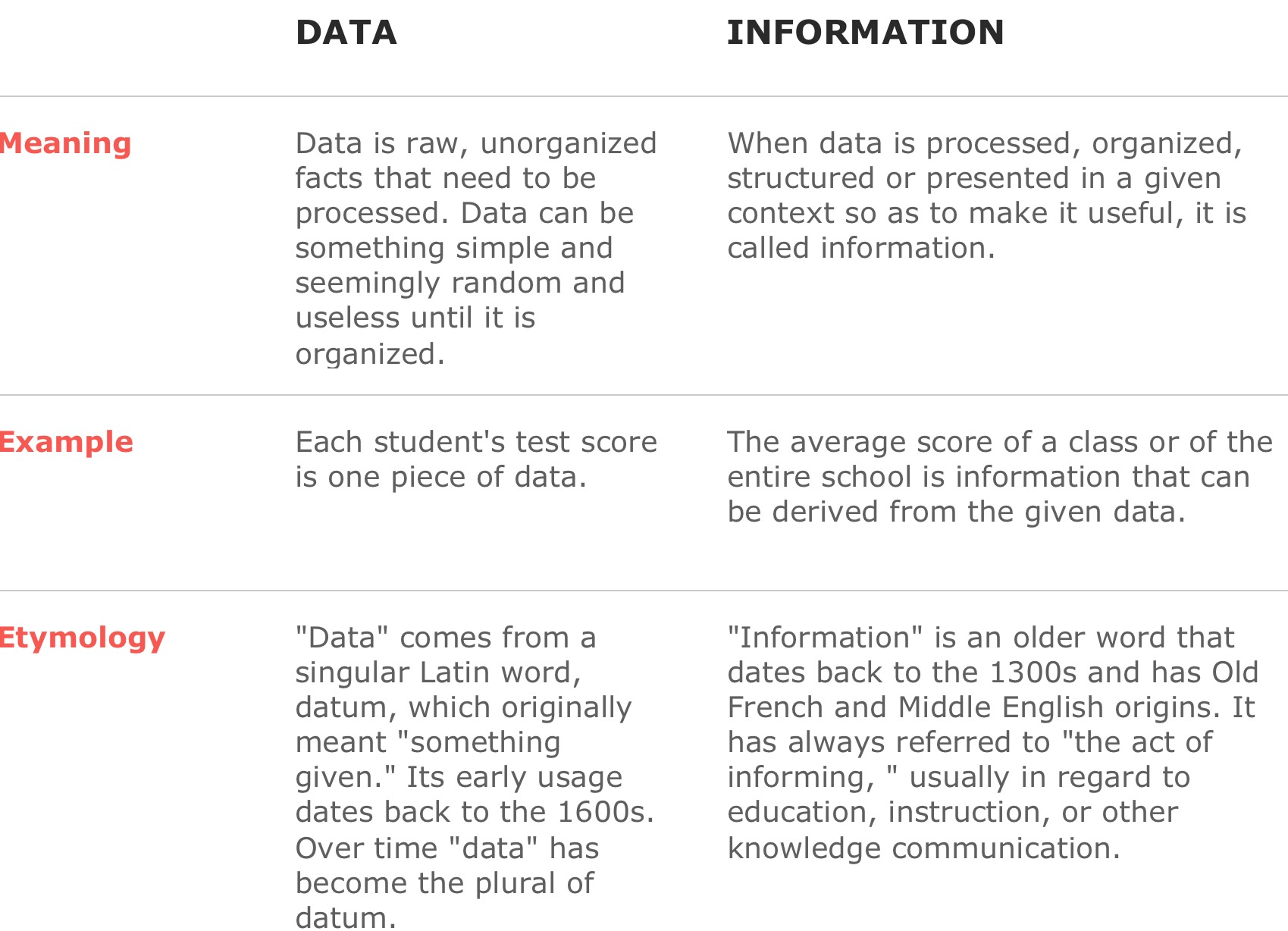

Information vs data

There is a subtle difference between data and information. Data are the facts or details from which information is derived. Individual pieces of data are rarely useful alone. For data to become information, data needs to be put into context.

Examples of Data and Information

The history of temperature readings all over the world for the past 100 years is data.

If this data is organized and analyzed to find that global temperature is rising, then that is information.

The number of visitors to a website by country is an example of data.

Finding out that traffic from the U.S. is increasing while that from Australia is decreasing is meaningful information.

Often data is required to back up a claim or conclusion (information) derived or deduced from it.

For example, before a drug is approved by the FDA, the manufacturer must conduct clinical trials and present a lot of data to demonstrate that the drug is safe.

“Misleading” Data

Because data needs to be interpreted and analyzed, it is quite possible — indeed, very probable — that it will be interpreted incorrectly. When this leads to erroneous conclusions, it is said that the data are misleading. Often this is the result of incomplete data or a lack of context.

For example, your investment in a mutual fund may be up by 5% and you may conclude that the fund managers are doing a great job. However, this could be misleading if the major stock market indices are up by 12%. In this case, the fund has underperformed the market significantly.

Comparison chart

Synthesis: the combining of the constituent elements of separate material or abstract entities into a single or unified entity ( opposed to analysis, ) the separating of any material or abstract entity into its constituent elements.

Synthesis

Data into Information is dominant in terms of data movement and replication, in essence data logistics.

Lineage is the key.

And with the simple action of linking data file metadata names to a businesses glossary or terms, Will result in deeply insightful and informative business insight and analysis.

“Analysis the separating of any material or abstract entity into its constituent elements”

In order for a business manager for analysis you need to be able to start the analysis at a understandable business terminology.

And then provide the manager with the ability to decompose or break apart the result.

They are three essential set of capabilities and associated techniquestechniques for analysis and lineage.

- Data profiling and domain analysis as well as fuzzy matching components available on my blog https://irawarrenwhiteside.com/2014/04/13/creating-a-metadata-mart-via-tsql/

- Meta-data driven creation of a meta-data mart through code generation techniques, implemented.

Underlining each of these capabilities is a set of refined, developed and proven code says for accomplishing these basic fundamental task.

One case study

I have been in this business over 45 years and I’d like to offer one example of the power of the concept of a meta-data mart and lineage as it regards to business insight.

A lineage, information and data story for BCBS

I was called on Thursday and told to attend a meeting on Friday between our companies leadership and the new Chief Analytics Officer. He was prototypical of the new IT a “new school” IT Director.

I had been introduced via LinkedIn to this director a week earlier as he had followed one of my blogs on metadata marts and lineage.

After a brief introduction, our leadership began to speak and the director immediately held up his hand he said “Please don’t say anything right now the profiling you provided me is at the kindergarten level and you are dishonest”

The project was a 20 week $900,000 effort and we were in week 10.

The company has desired to do a proof of concept and better understand the use of the informatics a tool DQ as well as direction for a data governance program.

To date what had been accomplished was in a cumulation of hours of effort in billing that has not resulted in any tangible deliverable.

The project had focused on the implementation and functionally of the popular vendor tool, canned data profiling results and not providing information to the business.

The director commented on my blog post and asked if we could achieve that at his company, I of course said yes.

Immediately I proposed we use the methodology that would allow us to focus on a tops down process of understanding critical business metrics and a bottoms up process of linking data to business terms.

My basic premise was that unless your deliverable from a data quality project can provide you business insight from the top down it is of little value. In essence you’ll spend $900,000 to tell a business executive they have dirty data. At which point he will say to you “so what’s new”.

The next step was to use the business terminology glossary that existed in informatica metadata manager and map those terms to source data columns and source systems, not an extremely difficult exercise. However this is the critical step in providing a business manager the understanding and context of data statistics.

The next step, was the crucial step in which we made a slight modification to the IDQ tool and allowed the storing of the profiling results into a meta-data mart and the association of a business dimension from the business glossary the reporting statistics.

We were able to populate my predefined metadata mart dimensional model by using the tool the company and already purchased.

Lastly by using a dimensional model we were able to allow the business to apply their current reporting tool.

Upon realizing the issues they faced in their business metrics, they accelerated the data governance program and canceled the data lake until a future date.

Now for the results.

Within six weeks we provided an executive dashboard based on a meta-data mart that allowed the business to reassess their plans involving governance and a data lake.

Here are some of the results of their ability to analyze their basic data statistics but mapped to their business terminology.

- 6000 in properly form SS cents

- 35,000 dependence of subscribers over 35 years old

- Thousands of charges to PPO plans out of the counties they were restricted to.

- There were mysterious double counts in patient eligibility counts, managers were now able to drill into those accounts by source system and find that a simple Syncsort utility had been used improperly and duplicated records.

J

Navigating the Information Value Chain

Data Governance

Navigating the Information Value Chain

Demystifying the path forward

The challenge for businesses is to seek answers to questions, they do this with Metrics (KPI’s) and know the relationships of the data, organized by logical categories(dimensions) that make up the result or answer to the question. This is what constitutes the Information Value Chain

Navigation

Let’s assume that you have a business problem, a business question that needs answers and you need to know the details of the data related to the business question.

Information Value Chain

Information Value Chain

• Business is based on Concepts.

• People thinks in terms of Concepts.

• Concepts come from Knowledge.

• Knowledge comes from Information.

• Information comes from Formulas.

• Formulas determine Information relationships based on quantities.

• Quantities come from Data.

• Data physically exist.

In today’s fast-paced high-tech business world this basic navigation (drill thru) business concept is fundamental and seems to be overlooked, in the zeal to embrace modern technology

In our quest to embrace fresh technological capabilities, a business must realize you can only truly discover new insights when you can validate them against your business model or your businesses Information Value Chain, that is currently creating your information or results.

Today data needs to be deciphered into information in order to apply formulas to determine relationships and validate concepts, in real time.

We are inundated with technical innovations and concepts it’s important to note that business is driving these changes not necessarily technology

Business is constantly striving for a better insights, better information and increased automation as well as the lower cost while doing these things several of these were examined and John Thuma’s‘ latest article

Historically though these changes were few and far between however innovation in hardware storage(technology) as well as software and compute innovations have led to a rapid unveiling of newer concepts as well as new technologies

Demystifying the path forward.

In this article we’re going to review the basic principles of information governance required for a business measure their performance. As well as explore some of the connections to some of these new technological concepts for lowering cost

To a large degree I think we’re going to find that why we do things has not changed significantly it’s just how, we now have different ways to do them.

It’s important while embracing new technology to keep in mind that some of the basic concepts, ideas, goals on how to properly structure and run a business have not changed even though many more insights and much more information and data is now available.

My point is in the implementing these technological advances could be worthless to the business and maybe even destructive, unless they are associated with a actual set of Business Information Goals(Measurements KPI’s) and they are linked directly with understandable Business deliverables.

And moreover prior to even considering or engaging a data scienkce or attempt data mining you should organize your datasets capturing the relationships and apply a “scoring” or “ranking” process and be able to relate them to your business information model or Information Value Chain, with the concept of quality applied real time.

The foundation for a business to navigate their Information Value Chain is an underlying Information Architecture. An Information Architecture typically, involves a model or concept of information that is used and applied to activities which require explicit details of complex information systems.

Subsequently a data management and databases are required, they form the foundation of your Information Value Chain, to bring this back to the Business Goal. Let’s take a quick look at the difference between relational database technology and graph technology as a part of emerging big data capabilities.

However, considering the timeframe for database technology evolution, has is introduced a cultural aspect of implementing new technology changes, basically resistance to change. Business that are running there current operations with technology and people form the 80s and 90s have a different perception of a solution then folks from the 2000s.

Therefore, in this case regarding a technical solution “perception is not reality”, awarement is. Business need to find ways to bridge the knowledge gap and increase awarement that simply embracing new technology will not fundamentally change the why a business is operates , however it will affect how.

Relational databases were introduced in 1970, and graph database technology was introduced in the mid to 2000

There are many topics included in the current Big Data concept to analyze, however the foundation is the Information Architecture, and the databases utilized to implement it.

There were some other advancements in database technology in between also however let’s focus on these two

History

1970

In a 1970s relational database, Based on mathematical Set theory, you could pre-define the relationship of tabular (tables) , implement them in a hardened structure, then query them by manually joining the tables thru physically naming attributes and gain much better insight than previous database technology however if you needed a new relationship it would require manual effort and then migration of old to new , In addition your answer it was only good as the hard coding query created

2020

In mid-2000’s the graph database was introduced , based on graph theory, that defines the relationships as tuples containing nodes and edges. Graphs represent things and relationships events describes connections between things, which makes it an ideal fit for a navigating relationship. Unlike conventional table-oriented databases, graph databases (for example Neo4J, Neptune) represent entities and relationships between them. New relationships can be discovered and added easily and without migration, basically much less manual effort.

Nodes and Edges

Graphs are made up of ‘nodes’ and ‘edges’. A node represents a ‘thing’ and an edge represents a connection between two ‘things’. The ‘thing’ in question might be a tangible object, such as an instance of an article, or a concept such as a subject area. A node can have properties (e.g. title, publication date). An edge can have a type, for example to indicate what kind of relationship the edge represents.

Takeaway.

The takeaway there are many spokes on the cultural wheel, in a business today, encompassing business acumen, technology acumen and information relationships and raw data knowledge and while they are all equally critical to success, the absolute critical step is that the logical business model defined as the Information Value Chain is maintained and enhanced.

It is a given that all business desire to lower cost and gain insight into information, it is imperative that a business maintain and improve their ability to provide accurate information that can be audited and traceable and navigate the Information Value Chain,Data Science can only be achieved after a business fully understand their existing Information Architecture and strive to maintain it.

Note as I stated above an Information Architecture is not your Enterprise Architecture. Information architecture is the structural design of shared information environments; the art and science of organizing and labelling websites, intranets, online communities and software to support usability and findability; and an emerging community of practice focused on bringing principles of design, architecture and information science to the digital landscape. Typically, it involves a model or concept of information that is used and applied to activities which require explicit details of complex information systems.

In essence, a business needs a Rosetta stone in order translate past, current and future results.

In future articles we’re going to explore and dive into how these new technologies can be utilized and more importantly how they relate to Business outcomes

Chocolate cake, MDM, data quality, machine learning and creating the information value chain’

The primary take away from this article will be that you don’t start your Machine Learning project, MDM , Data Quality or Analytical project with “data” analysis, you start with the end in mind, the business objective in mind. We don’t need to analyze data to know what it is, it’s like oil or water or sand or flour.

Unless we have a business purpose to use these things, we don’t need to analyze them to know what they are. Then because they are only ingredients to whatever we’re trying to make. And what makes them important is to what degree they are part of the recipe , how they are associated

Business Objective: Make Desert

Business Questions: The consensus is Chocolate Cake , how do we make it?

Business Metrics: Baked Chocolate Cake

Metric Decomposition: What are the ingredients and portions?

2/3 cup butter, softened

1-2/3 cups sugar

3 large eggs

2 cups all-purpose flour

2/3 cup baking cocoa

1-1/4 teaspoons baking soda

1 teaspoon salt

1-1/3 cups milk

Confectioners’ sugar or favorite frosting

So here is the point you don’t start to figure out what you’re going to have for dessert by analyzing the quality of the ingredients. It’s not important until you put them in the context of what you’re making and how they relate in essence, or how the ingredients are linked or they are chained together.

In relation to my example of desert and a chocolate cake, an example could be, that you only have one cup of sugar, the eggs could’ve set out on the counter all day, the flour could be coconut flour , etc. etc. you make your judgment on whether not to make the cake on the basis of analyzing all the ingredients in the context of what you want to, which is a chocolate cake made with possibly warm eggs, cocunut flour and only one cup of sugar.

Again belaboring this you don’t start you project by looking at a single entity column or piece of data, until you know what you’re going to use it for in the context of meeting your business objectives.

Applying this to the area of machine learning, data quality and/or MDM lets take an example as follows:

Business Objective: Determine Operating Income

Business Questions: How much do we make, what does it cost us.

Business. Metrics: Operating income = gross income – operating expenses – depreciation – amortization.

Metric Decomposition: What do I need to determine a Operating income?

Gross Income = Sales Amount from Sales Table, Product, Address

Operating Expense = Cost from Expense Table, Department, Vendor

Etc…

Dimensions to Analyze for quality.

Product

Address

Department

Vendor

You may think these are the ingredients for our chocolate cake in regards to business and operating income however we’re missing one key component, the portions or relationship, in business, this would mean the association,hierarchy or drill path that the business will follow when asking a question such as why is our operating income low?

For instance the CEO might first ask what area of the country are we making the least amount of money?

After that the CEO may ask well in that part of the country, what product is making the least amount of money and who manages it, what about the parts suppliers?

Product => Address => Department => Vendor

Product => Department => Vendor => Address

Many times these hierarchies, drill downs, associations or relationships are based on various legal transaction of related data elements the company requires either between their customers and or vendors.

The point here is we need to know the relationships , dependencies and associations that are required for each business legal transaction we’re going to have to build in order to link these elements directly to the metrics that are required for determining operating income, and subsequently answering questions about it.

No matter the project, whether we are preparing for developing a machine learning model, building an MDM application or providing an analytical application if we cannot provide these elements and their associations to a metric , we will not have answered the key business questions and will most likely fail.

The need to resolve the relationships is what drives the need for data quality which is really a way of understanding what you need to do to standardize your data. Because the only way to create the relationships is with standards and mappings between entities.

The key is mastering and linking relationships or associations required for answering business questions, it is certainly not just mastering “data” with out context.

We need MASTER DATA RELATIONSHIP MANAGEMENT

not

MASTER DATA MANAGEMENT.

So final thoughts are the key to making the chocolate cake is understanding the relationships and the relative importance of the data/ingredients to each other not the individual quality of each ingredient.

This also affects the workflow, Many inexperienced MDM Data architects do not realize that these associations form the basis for the fact tables in the analytical area. These associations will be the primary path(work flow) the data stewards will follow in performing maintenance , the stewards will be guided based on these associations to maintain the surrounding dimensions/master entities. Unfortunately instead some architects will focus on the technology and not the business. Virtually all MDM tools are model driven APIs and rely on these relationships(hierarchies) to generate work flow and maintenance screen generation. Many inexperienced architects focus on MVP(Minimal Viable Product), or technical short term deliverable and are quickly called to task due to the fact the incurred cost for the business is not lowered as well as the final product(Chocolate Cake) is delayed and will now cost more.

Unless the specifics of questionable quality in a specific entity or table or understood in the context of the greater business question and association it cannot be excluded are included.

An excellent resource for understanding this context can we found by following: John Owens

Final , final thoughts, there is an emphasis on creating the MVP(Minimal Viable Product) in projects today, my take is in the real world you need to deliver the chocolate cake, simply delivering the cake with no frosting will not do,in reality the client wants to “have their cake and eat it too”.

Note:

Operating Income is a synonym for earnings before interest and taxes (EBIT) and is also referred to as “operating profit” or “recurring profit.” Operating income is calculated as: Operating income = gross income – operating expenses – depreciation – amortization.

DNA and the concept of MDM(Master Data Management) have many similarities.

DNA and the concept of MDM( Master Data Management) or Modern ML/AI Data Preparation have many similarities.

“There is a subtle difference between data and information.”

We in IT have complicated and diluted the concept and process of analyzing data and business metrics incredibly in the last few decades. We seem to be focus in on the word data.

And if you consider the primary business objectives of MDM is provide consistent answers with standard business definitions and an understanding of the relationship or mappings of business outcomes to data elements.

DNA vs MDM or IT’s version of DNA.

The graphic I’ve chosen for this post symbolizes the linkage or lineage of human beings to DNA. What I’d like to do is relate the importance of lineage of data to examples of human how DNA communicate lineage and then discuss it in our IT as it relates to business functionality

Living Organisms are very complex as is a company and its data or information.

The genetic information of every living organism is stored inside these nucleic the basic data.

There are two types of nucleic acids(NA) namely:

DNA– Defines Traits, Characteristics

RNA – Communication, transfers information and synthesis.

Lets examine them.

DNA- Defines Traits, Characteristics

DNA-Deoxyribonucleic acid – In most living organisms (except for viruses), genetic information is stored in the form of DNA.

RNA – Communication, transfers information and synthesis

.

RNA – can move around in the cells of living organisms and serves as a genetic messenger, passing the information stored in the cell’s DNA from the nucleus to other parts of the cell for protein synthesis.

So here goes this is a bit of a stretch but if you consider DNA is the “Data” and a person the “Information” is created from the communication through the RNA.

To continue the analogy the DNA or chromosomes in and by themselves are out of context. It’s only once they been passed from one person to the next driven by RNA and result in a human being in that they become contextually realized as a human.

Again if we break down human DNA and inspected it, we can tell many things origin, or ancestory , traits of the person possible, diseases of the person, but it needs to be processed for us to understand the actual person.

So my point in relation to various approaches to traditional MDM or Master Data Management yis that this(DNA) is how life is created, it’s science, it’s not a methodology or product in approach a vendor guess work it’s real and the main point is “lineage” is the key

“There is a subtle difference between data and information. Data are the facts or details from which is derived. Individual pieces of data are rarely useful alone. For data to become information, data needs to be put into context.”

Examples of Data and Information as it relates to MDM

The history of temperature readings all over the world for the past 100 years is data.

If this data is organized and analyzed to find that global temperature is rising, then that is information.

- The number of visitors to a website by country is an example of data.

- Finding out that traffic from the U.S. is increasing while that from Australia is decreasing is meaningful information.

- Often data is required to back up a claim or conclusion (information) derived or deduced from it.

- For example, before a drug is approved by the FDA, the manufacturer must conduct clinical trials and present a lot of data to demonstrate that the drug is safe.

“Mislesading” Data”

Data needs to be interpreted and analyzed, it is quite possible — indeed, very probable — that it will be interpreted incorrectly.

When this leads to erroneous conclusions, it is said that the data are misleading. Often this is the result of incomplete data or a lack of context.

For example, your investment in a mutual fund may be up by 5% and you may conclude that the fund managers are doing a great job. However, this could be misleading if the major stock market indices are up by 12%. In this case, the fund has underperformed the market significantly.

Comparison charts

“Synthesis: the combining of the constituent elements of separate material or abstract entities into a single or unified entity ( opposed to analysis, ) the separating of any material or abstract entity into its constituent elements.”

Synthesis in MDM

Communication, transfers information and synthesis, like RNA several companies are prevelant in terms of data movement and replication, in essence data logistics

Defines Traits, Characteristics like DNA many companies have developed and refined products and the techniques required for during these. They are data profiling , domain pattern profiling and record linkage, the basis of transforming data into information .

However lineage is the key, and without this to serve as a connection between data and information, in essence there is no information. And in this case the “information” is the business term from the business glossary.

The integration of data movement capability and the linking of data profiling capabilities can result in providing a business the capabilities answering business question with certitude through the transparency of lineage.

To translate this into “business terms” it’s very similar to providing an audit trail in that a business can ask this questions like what customers are the most profitable look at those customers and then drill into wood products what areas with those profits coming

What lineage accomplishes is to lay a trail of cookie crumbs for data movement but for your business questions, it’s simply makes sure that you can connect the dots as data gets moved and/or translated and/or standardize and or cleansed throughout your enterprise.

And with the simple action of linking data file metadata of files, columns , profiling results to a businesses glossary or business terms, will result in deeply insightful and informative business insight and analysis.

“Analysis the separating of any material or abstract entity into its constituent elements”

In order for a business manager for analyze you need to be able to start the analysis at a understandable business terminology level. And then provide the manager with the ability to decompose or understand lineage from a logical perspective.

There are three essential capabilities required for analysis and utilizing lineage to answer business questions via a meta-data mart and these are very similar to the pattern that exist in DNA.

1. Data profiling and domain(column) analysis as well as fuzzy matching processes that are available in many forms:

a. – Scan all the values within each column and provide statistics(counts) such as minimum value, maximin value, mode(most occuring) value, number of missing value etc…

b. Frequency or Column value Patterns – determine the counts of distinct values within a column and also identify the distinct pattern occurring for all values within a single column and associated counts ie(SSN = 123-45-6789 , SSN PATTERN = ‘999-99-9999’

c. Fuzzy Matching( similarity algorithms) – This capability enables the find the counts of duplicate or similar text values.

2. The results need to be stored is a “Metadata-mart” in order to see the patterns, results and associations providing lineage and retatingraw data to business terms and hierarchies.

3. Visualization and analysis capability to allow for analysis, drill down into data mart aggregated and statistical contents and associated business hierarchies and businessterminology

Underlying each of these analytical capabilities is a set of refined processes, developed and proven code for accomplishing these basic fundamental task.

In future post I will describe how to implement these capabilities with or without vendor products, from a logical perspective.

References:

http://www.diffen.com/difference/Data_vs_Information

Explaining the Path to Data Governance from the Ground Up!

We will explore a methodology for understanding and implementing Data Governance that relies on an ground up approach described by Michael Belcher from Gartner as using ETL and Data Warehouse techniques to build a metadata mart, in order to “boot strap” a Data Governance” effort.

We will discuss the difference between the communication required for data governance and the engineering approach to implementing the “precision” required for MDM, via Data Profiling.

With Data Governance can apply the age old management adage “You get what you inspect, not what you expect” Readers Digest.

We will describe how to implement a data quality dashboard in Excel and how it supports a Data Governance effort in terms of inspection.

Future videos will explore how to build metadata repository as well as the TSQL required to load and update the repository and the data model as well as column and table relationship analysis using the Domain profiling results.

MDS Versions, Flags and Subscriptions

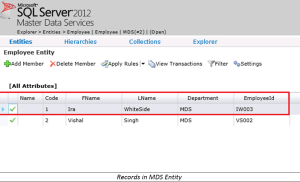

Recently I collaborated with Andrew Milner in a scenario in MDS SQL 2012 to provide the client the ability to with between multiple Versions and Flags via Subscription Views. Vishal Singh did an excellent job in implementing this scenario in MDS.

Versions and Flags

MDS Model defines the structure of the Data and Version defines the content of the data. In Master Data Services, multiple versions of the master data can be created within a model. Versions created in model can be in sequence fashion (one version after another) or in simultaneous fashion (multiple versions in parallel). This way we ensure we have a dedicated/consistent version of our master data for downstream consumption while at the same time having another version for adding/deleting/modifying members or for testing our model data, without compromising consistency in master data availability.

For example: We may want to have one version of the data marked as “current”, and another set marked as “proposed” using Version Flags. When the proposed version is confirmed as valid and clean, we can move that from proposed to current. So the version marked as “current” will always be the “Golden Copy of the Data”

When to Use Versions

Use versions to:

- Prevent users from making changes while all data validates successfully against business rules.

- Lock down a model for use by subscribing systems.

Version Flags

When a version is ready for users or for a subscribing system, we can set a flag to identify the version. We can move flag from version to version as required. Flags help user and downstream applications identify which version of a model to use.

Scenario:

Create Model and 3 Versions (one in Commit Status and other two in Open Status).

An initial version is created by default when a model is created. In order to create two other versions in Open status, commit default version and create two versions from the committed version. Name two versions as PROD and TEST and map it to two different Flags PROD and TEST respectively. Data inserted in two different versions are mutually exclusive meaning they are not related to each other. Data modified in one of the version will not be reflected in another version. Subscription views created based on Version will also have data related to that Version only. Flags help user and downstream applications identify which version of a model to use.

For example: Created a sample Model called “Employee” and created three versions VERSION_1 (committed status), MDS (open status) and SSIS (open status). In the same way created two flags MDS and SSIS mapped to MDS and SSIS versions respectively. Data present in different versions (MDS and SSIS) are not related and thus not in synch.

In Employee entity there is one employee which works in two different technologies MDS and SSIS as highlighted below. Employee (Employee ID: IW003) works in both SSIS and MDS technology but there is a difference in their name in different versions.

Records highlighted above are common in MDS and SSIS versions of Employee Entity with only difference in their Name (EmployeeID: IW003) . Subscription view created on the MDS (linked to MDS flag) and SSIS (linked to SSIS flag) versions will have different set of data. Please find below the data present in subscription-view:

Downstream application will fetch data based on the subscription views which they are consuming. If downstream application is consuming MDS version (mapped to MDS flag) will have different data and one consuming SSIS version (mapped to SSIS flag) will have different of data.

Architecture in MDS Acme

We have used below logic in MDS Acme:

In MDS Acme we have two different data sources Production and Test. Data coming from different data sources are getting inserted in the same model (Sample) and same entities. Two versions V1 and V2 are created pointing to Production and Test data sources. Versions V1 and V2 are mapped to Flags F1 and F2 respectively. Subscription views created are linked to Version flags. Downstream applications are consuming data based on the subscription view published. When a new Version needs to be created called V3 which is copy of version V2 below steps need to be done:

- Validate and commit old version V2 as in this example.

- Copy the old version and create a new version called V3 from V2

- Remove the flag F1 from the old version V2

- Map flag F1 to newly created version V3

As the subscription views are created based on the Version Flags and not on Version. So we don’t have to change the subscription views for the newly created version. Downstream application will now be able to fetch data from the new versions instead of old versions.

Informatica Cloud MDM for Salesforce (formerly Data Scout) Review

Tactically improving Data Quality and incrementally achieving Data Governance and metadata management is a natural path and MDM is the center of that strategy. See Gartner Group’s Applying Data Mart and Data Warehousing Concepts to Metadata Management

In Metadata Mart the Road to Data Governance or Guerilla Data Governance I outline this approach

I’ve just completed a Data Governance Assessment and review of Informatica CLOUD MDM(formerly Data Scout for Salesforce) with my colleague and excellent Solution Architect Baliji Kkarade . The client in this case is interested in implementing Informatica CLOUD MDM in Salesforce , as a tactical approach to improving Data Quality and incrementally improving Data Governance . I’d like to aknowledge the incredible insight I gained from Balaji Kharade in this effort.

In general and product is positioned to provide a transactional MDM within Salesforce. We will cover the steps for implementation and some back ground on Fuzzy Matching or de-duplication.

We will walk thru the steps for setting up the tool.

- Cloud MDM Settings

- Cloud MDM Profile

- Adding Cloud related Information to Page Layout

- Synchronization Settings

- Data Cleansing

- Fuzzy Matching and Segments

- External Data Sources

- Consolidation and Enrichment

- Limitations

This post assumes familiarity with the Saleforce architecture.

1. Cloud MDM Settings

- Cloud MDM master on/off switch is configured using this setting and other settings like extracting the legal form and domain , overriding Salesforce Account information using Master bean after Match and Merge in Cloud MDM, and Standardizing Country.

- In some cases, you may wish to turn off Cloud MDM after you have installed and configured it.

- For example, if you wish to bring in a new set of data without creating beans. To achieve this, you need to switch Cloud MDM off.

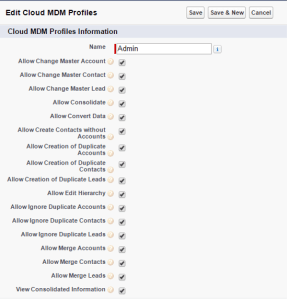

2. Cloud MDM Profile:

- When Cloud MDM is installed, a default profile is given to all users. In order for your user to get access to all the features of Cloud MDM, you must configure an admin or super user profile. When you implement Cloud MDM, you can use profiles to assign MDM functionality to Salesforce user profiles.

- Users can have Permissions to Create/Update/Merge/consolidate Account, Contact and Leads, Create/Ignore duplicate Account, Contact and Leads, View consolidated information and create/Edit Hierarchy information

3. Add Cloud Related Information to Page Layout:

Helps to add MDM related components like Consolidated view, Find duplicates, MDM related fields like Synchronize, Legal forms, ISO country ,duplicate Account section, Related beans and Master Beans etc .

4. Synchronization Settings:

- This setting helps in Synchronizing/Mapping the Salesforce Attributes to Cloud MDM stage Area.

- We can map Standard fields and 10 custom fields. These standard and custom fields help us in configuring segment settings and match strategy in cloud MDM.

- Sync job helps creating beans and Master beans in cloud MDM stage Area.

5. Data Cleansing:

Data cleansing ensures the data is in a consistent format. Consistent data improves the quality of reporting and also improves matching results and the accuracy of duplicate detection.

Legal Form :

Legal form normalization is the process of extracting the legal form from the company norm and populating the legal form field with normalized data.

For example, We can configure the legal form field to contain normalized data for business entity designations such as Limited, Ltd., and L.T.D. We can add legal forms to the list available already after profoiling our data set.

Domain Normalization :

We can enable Cloud MDM to populate the domain field with a domain extracted from the website field. Cloud MDM uses the domain field during fuzzy matching.

For example, if a user enters http://www.acme.com/products or www. acme.com in the website field, Cloud MDM can populate the domain field with acme.com. normalized domain ensures domain field consistency and improves match results.

6. Fuzzy Matching and Segments:

Segment :

The segment field in the master bean record contains a matching segment. The matching segment is a string of characters that Cloud MDM uses to filter records before it performs fuzzy matching.

To improve fuzzy match performance, Cloud MDM performs an exact match on the matching segments to eliminate records that are unlikely to match. Cloud MDM then performs fuzzy matching on the remaining records. This can be basically creating Categories and Groups in Advanced Fuzzy Matching or “Blocking Indexes” Record Linkage. This will be created for all the Accounts once the Sync between Salesforce and MDM is done. It is also generated for external beans.

Fuzzy matching :

Fuzzy matching can match strings that are not exactly the same but have similar characteristics and similar patterns.

One example of a Fuzzy Matching algorithm is LevenShtein, the original Fuzzy algorithm Levenshtein Distance or Edit Distinceinvented in 1965

Levenshtein:

Counts the number of incorrect characters, insertions and deletions.

Returns:

(maxLen – mistakes) / maxLen

Levenshtein is a good algorithm for catching keyboarding errors

Matching is a two step process that determines a match score between two records. First, Cloud MDM performs an exact match on the matching segments to exclude records that are unlikely to have matches. Then, Cloud MDM performs a fuzzy match on the remaining records to calculate a match score between pairs of records. If the match score of the two records achieves the match score threshold, Cloud MDM considers the two records a match.

7. External Data Source:

We have external data in a system, such as SAP or Oracle EBS. We wish to load this data directly into beans, so we can take some of the information (SIC Code, No of Employees.) from the SAP record, and retain some information (e.g. Company Name) from the Salesforce record.

This setting allows us to configure the external Data source and defining the trust/priority score i.e. which value will win over the other during Consolidation and Enrichment process.

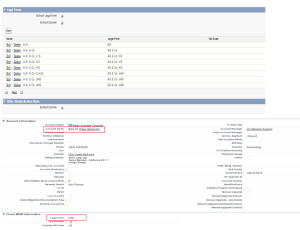

8. Consolidation and Enrichment.

Consolidation :

The consolidated view allows us to look at all beans associated with a master bean. In order to use this view, we must configure the fields that will display in the list of associated beans, as well as the account address information. This is done by configuring field sets.

Enrichment:

This setting allows us to over write the value from the Master bean to the Salesforce Org Account based on the trust/priority score provided during the configuration of the external Data source. We can use the override account option in cloud MDM settings to prevent the automatic override of the Salesforce Org Account accordingly.

9. Limitations.

There are two primary limitations:

- Custom Fields are limited to 10 and only 6 can be used in syncing.

- High volume matching from External Source is completed in a “Pre Match” process, which is basically accessing the “Master Bean” externally and developing ETL Process with another tool.