Category Archives: Awarement

Organic Data Quality vs Machine Data Quality

A few recent blog posts on centralized vs. distributed data quality have provoked me to offer another point of view.

Organic, distributed data quality provides today’s awareness for all current source analysis and problem resolution, and in most cases the work is done manually by individuals.

In many cases, when management or leadership (folks worried about their jobs) are presented with any type of organized, machine-based data quality results that can be easily viewed and understood and are permanently available, the usual result is to kill or discredit the messenger.

Automated “Data Quality Scoring” (Profiling + Metadata Mart) brings them from a general “awareness” to a realized state “awarement”.

Awarement is the established form of awareness. Once one has accomplished their sense of awareness they have come to terms with awarement.

It’s one thing to know that alcohol can get you drunk; it’s quite another to be aware that you are drunk when you are drunk.

Perception = Perception

Awarement = Reality

Departmental DQ = Awareness

vs

Centralized DQ = Awarement

Share the love… of Data Quality– A distributed approach to Data Quality may result in better data outcomes

My Wife, My Family, My Stroke (Perception is Perception: Awarement is Reality)

Six years earlier the doctors had told my wife (Theresa) she had 18 months to live; she had been diagnosed with Stage 4 cancer. She is now in full remission. A woman like her mother, she never drank and never smoked.

On June 3, 2014 I had a brain stem (pons) stroke.

I have always told my daughters “Men are idiots”, and apparently I have proven it. Here is an example:

5:00 Monday evening my arm starts twitching and doesn’t stop.

7:00 Monday evening my arm is still twitching. My wife Tessie (beautiful, wonderful, courageous and wise) says “Let me take you to the hospital”. Of course, like any normal “man”, I say “Nah, it’s no big deal”.

11:00 PM Monday I take a conference call and notice my left arm and leg are a little numb. Obviously I take an aspirin and go back to bed.

5:00 AM Tuesday I plan to catch a flight; my wife intervenes and I stay home.

7:00 AM Tuesday I take another conference call. By now I am limping and can’t hold a cup of coffee in my left hand.

8:00 AM Tuesday my wife now says we are going to the hospital.

8:30 AM Tuesday in the hospital I am informed I had a pons (brain stem) stroke. I can no longer move my left leg or arm and my speech is pretty slurred. As a bonus, the doctors tell me that if I had come in within 5 hours of the first symptom they might have been able to help, but now it is too late.

10:00 PM Thursday my daughter Christina notices the nurses had taken off my bracelet and then administered a second injection of Lantus (long-acting insulin). Unfortunately I didn’t focus on what she pointed out. I would regret not listening.

11:00 PM Thursday I pass out in a diabetic coma in front of my wife and daughter (Victoria). After frantic activity I end up in the ICU, back to square one.

Many illnesses happen in slow motion, but this episode of unconsciousness (coma) came very suddenly. If it were not for Tessie’s calmness, control, faith in God and knowledge, I am sure I would not be here.

The nurses were unsure what to do; apparently they had given me an extra dose of insulin, and they were very slow to respond.

My wife Theresa (Tessie), with Victoria’s help, literally had to keep me alert and practically drag me to the ICU over the nurses’ objections. You know it’s bad when the nurses are asking your wife for the “Power of Attorney”, which of course she had already given them, and they had lost it.

…..25 days later (diabetic coma, ICU, rehabilitation, multiple brain scans, seizure scans, seizures, diarrhea) I am released on the condition that I use a walker. Since then I have graduated to a cane. My wife stayed with me the entire 25 days.

I am very lucky: I am left with hemiparesis (weakness on the left side) and double vision (right side).

For 25 days …….. , 25 DAYS …….. my wife (Theresa) and my entire family never left my side (day or night):

Theresa (Wife, Soul Mate, My everything), Christina (Daughter #1, The Rock), Victoria (Daughter #2, Brainiac), Julia (Granddaughter #1, Angel sent from heaven), Brandon (Son-in-Law), Jack (Grandson #1), Jacob (Grandson #2)

Tessie and our family at St Mary’s in earlier times.

As a man, husband, father and grandfather I have tried to be up to the task of providing for my family, sometimes succeeding and, in my eyes, many times falling short.

My wife led a family effort that, as I write about it and remember it, leaves me overwhelmed and tearful. I wish I could say I deserve this, but I can’t; I was not good enough.

The reality is that as we grow older we grow wiser, not through knowledge alone, but through experience.

Apparently Pavlov was “more right” than Plato (Allegory of the Cave). Perception is not reality; Awarement is reality, and it comes through pain.

This experience has given me the opportunity to eliminate any remnants of ego and has permanently instilled a sense of humility. Countless times during my 25-day stay, my family (ALL OF THEM) helped me dress, eat and go to the bathroom (with diarrhea).

As I said, at one point I went into a coma after the extra dose of insulin and needed emergency treatment and a move to the ICU. It was one of many very traumatic experiences, and my wife Tessie never faltered, kept her faith and strength, and brought me back.

Epilogue: At one point I had to prove my cognitive skills were still intact. Since my wife and daughters have a small consulting company, Actuality Business Intelligence, we collaborated on an assessment and PowerPoint on how to improve the rehabilitation scheduling system and patient/therapist flow. From my perspective, anything that made rehab like work was good.

To be clear my hospital room was family “Grand Central Station”

Theresa(Tessie),

This post is dedicated to you. I love you with all my heart and soul, forever and ever. God has truly blessed me with you as my soul mate and with your love and care.

Your eternally grateful husband – Ira

Ira Warren Whiteside

11/25/2014

Guerilla MDM via Microsoft MDS: Force Model Validation or T-SQL Script to Force-Set Validation Status IDs

Using metadata and code generation to programmatically set Validation Status IDs for all leaf members in an MDS entity.

This script relies on the MDS procedure mdm.udpMemberValidationStatusUpdate, which updates a single member. I could not get the stored procedure for multiple members (mdm.udpMembersValidationStatusUpdate) to work because I could not resolve an “operand type clash” error, so the script calls the single-member version once per member.

The script takes the model name and entity name and generates a T-SQL statement for each member ID that sets it to the designated validation status.

IF OBJECT_ID('tempdb..#MemberIdList') IS NOT NULL
    DROP TABLE #MemberIdList

DECLARE @ModelName nvarchar(50) = 'Supplier'
DECLARE @Model_ID int
DECLARE @Version_ID int
DECLARE @Entity_ID int
DECLARE @Entity_Name nvarchar(50) = 'Supplier'
DECLARE @Entity_Table nvarchar(50)
DECLARE @sql nvarchar(500)
DECLARE @sqlExec nvarchar(500) = 'EXEC mdm.udpMemberValidationStatusUpdate '
DECLARE @ValidationStatus_ID int = 4

-- Found the following information in table [mdm].[tblList]
-- ListCode             ListName          Seq  ListOption
-- lstValidationStatus  ValidationStatus  0    New, Awaiting Validation
-- lstValidationStatus  ValidationStatus  1    Validating
-- lstValidationStatus  ValidationStatus  2    Awaiting Revalidation
-- lstValidationStatus  ValidationStatus  3    Validation Succeeded
-- lstValidationStatus  ValidationStatus  4    Validation Failed
-- lstValidationStatus  ValidationStatus  5    Awaiting Dependent Member Revalidation

-- MemberType_ID = 1 (Leaf Member)
DECLARE @MemberType_ID int = 1

-- Get the latest version ID for the model
SET @Version_ID = (SELECT MAX(ID)
                   FROM mdm.viw_SYSTEM_SCHEMA_VERSION
                   WHERE Model_Name = @ModelName)
print @Version_ID

-- Get the model ID for the model
-- (MAX keeps the subquery to a single row when the model has several versions)
SET @Model_ID = (SELECT MAX(Model_ID)
                 FROM mdm.viw_SYSTEM_SCHEMA_VERSION
                 WHERE Model_Name = @ModelName)
print @Model_ID

-- Get the entity ID for the specific entity
SET @Entity_ID = (SELECT [ID]
                  FROM [mdm].[tblEntity]
                  WHERE [Model_ID] = @Model_ID
                    AND [Name] = @Entity_Name)

-- Get the entity table name for the specific entity
SET @Entity_Table = (SELECT [EntityTable]
                     FROM [mdm].[tblEntity]
                     WHERE [Model_ID] = @Model_ID
                       AND [Name] = @Entity_Name)

print 'Processing Following Model ' + @ModelName
print 'Model ID = ' + CONVERT(varchar(20), @Model_ID)
print 'Version ID = ' + CONVERT(varchar(20), @Version_ID)
print 'Entity ID = ' + CONVERT(varchar(20), @Entity_ID)
print 'Entity Name = ' + @Entity_Name
print 'Entity Table Name ' + @Entity_Table
print 'Validation Status ID being set to ' + CONVERT(varchar(20), @ValidationStatus_ID) + ' for all members in ' + @Entity_Name

-- Create local temp table to hold the member IDs to update
CREATE TABLE #MemberIdList
(
    ID int,
    Processed int
)

-- Generate and run the SQL that populates the temp table
SET @sql = N'INSERT INTO #MemberIdList SELECT ID, 0 FROM mdm.' + QUOTENAME(@Entity_Table)
EXECUTE sp_executesql @sql

-- Create a table variable to hold the generated SQL commands
DECLARE @Id int
DECLARE @MemberSQL TABLE
(
    ID int,
    mdssql nvarchar(500),
    Processed int
)

-- Generate one stored procedure call per member
WHILE (SELECT COUNT(*) FROM #MemberIdList WHERE Processed = 0) > 0
BEGIN
    SELECT TOP 1 @Id = ID FROM #MemberIdList WHERE Processed = 0

    SET @sql = @sqlExec
             + CONVERT(varchar(20), @Version_ID) + ','
             + CONVERT(varchar(20), @Entity_ID) + ','
             + CONVERT(varchar(20), @Id) + ','
             + CONVERT(varchar(20), @MemberType_ID) + ','
             + CONVERT(varchar(20), @ValidationStatus_ID)
    print @sql

    INSERT INTO @MemberSQL
    SELECT @Id, @sql, 0

    UPDATE #MemberIdList SET Processed = 1 WHERE ID = @Id
END

-- Execute the generated commands one at a time
DECLARE @sqlsyntax nvarchar(500)

WHILE (SELECT COUNT(*) FROM @MemberSQL WHERE Processed = 0) > 0
BEGIN
    -- Read the ID and its statement from the same row
    SELECT TOP 1 @Id = ID, @sqlsyntax = mdssql FROM @MemberSQL WHERE Processed = 0
    print @sqlsyntax

    -- Comment out the next line to print the statements without updating the Validation Status ID
    EXECUTE sp_executesql @sqlsyntax

    UPDATE @MemberSQL SET Processed = 1 WHERE ID = @Id
END

-- Show the entity table so the new validation status can be checked
DECLARE @Entity_TableSQL nvarchar(500)
SET @Entity_TableSQL = N'SELECT * FROM [mdm].' + QUOTENAME(@Entity_Table)
print @Entity_TableSQL
EXEC sp_executesql @Entity_TableSQL

Here are several links I referenced:

The code for forcing the Validation of the Model is here. Jeremey Kashel’s Blog

Microsoft SQL Server 2012 Master Data Services 2/E Tyler Graham

I am sure this can be improved; please contact me with questions or suggestions.

-Ira Warren Whiteside

Agile MDM Data Modeling and Requirements Incorporating a Metadata Mart (Guerilla MDM)

Recently an acquaintance of mine asked for my thoughts on the approach for creating an MDM data model and the requirements artifacts for loading MDM.

I believe that with this client, and with most clients, the first thing we have to help them understand is that they need to take an organic and evolving, a.k.a. “agile”, approach utilizing Extreme Scoping in developing and fleshing out their MDM data model as well as the requirements.

High-level logical data models for customer, location, product etc, such as Open Data Model are readily available and generally not extremely customized or different.

If we look at the MDM aspect of data modeling and requirements, I look at it as three layers:

1. The logical data model.

2. The sub models (reference models), which detail the localization in the sources for each domain; in essence these are our source-based reference models.

3. An associative model that allows for the nexus/mapping between the sources and their domain values in the master domain model.

It doesn’t really get interesting until you’re able to dive into creating the sub models, associative models and relationships, and to understand the issues and anomalies in the source data that you’re dealing with, which inherently involves quite a bit of data profiling and metadata analysis.

In my experience the creation of the MDM model and sub models involves three simultaneous and parallel tracks.

1. Meet with users and perform metric decomposition (defined in my slides), define the information value chain, and create logical definitions of the metrics, the groupings of dimensions and their attributes, and subsequently the hierarchies that are required from an analysis perspective. The result is a data dictionary.

1a. The business deliverable here is a business matrix which shows the relationship between the metrics and the dimensions in relation to the business processes and/or KPIs. I’ll send an example.

1b. The relationship of the above deliverable to the logical model is that the business deliverables, specifically the business matrix and the associated hierarchies, will drive the core required data elements from the KPI and therefore the MDM perspective. In addition they will drive the necessary hierarchies (relationships) and thereby the associations required at the next level down, which involves the source-to-target mapping required for loading the reference tables.

1c. Lastly, once we truly understand the client’s requirements in enough detail in terms of metric definitions and hierarchies, we can select and incorporate reference models or industry experts to validate and vet our model. This should be relatively straightforward.

1d. From an MDM perspective, where multiple teams are simultaneously gathering information/requirements we would want to use and have access to the artifacts especially for new business process modeling or any BI requirements modeling.

2. Introduce the metadata mart concept and utilize local techniques and published capabilities (I have several that can easily be incorporated) to instantiate a metadata mart and incorporate metadata and data profiling as part of the data modeling process and the user awarement process.

2a. The deliverable here is a set of Excel pivot tables and/or reports that allow the users to analyze and understand the sources for domains and how they’re going to relate to the master data model, even at the logical level.

3. Capture the relationships that define the master domains, and document the required mappings and transformations in Source to Target Matrices.
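To make the profiling in track 2 concrete, here is a minimal Python sketch of the kind of column profile that could feed a metadata mart. It is an illustration only: the function name profile_column, the output field names and the sample source "crm" are my assumptions, not part of any published tool.

```python
from collections import Counter


def profile_column(source, column, values):
    """Build one metadata-mart row describing a single source column.

    All names here are hypothetical; a real metadata mart would likely
    also record data types, patterns and min/max values.
    """
    # Treat None and empty strings as missing values.
    non_null = [v for v in values if v not in (None, "")]
    counts = Counter(non_null)
    return {
        "source": source,
        "column": column,
        "row_count": len(values),
        "null_count": len(values) - len(non_null),
        "distinct_count": len(counts),
        # The most frequent values hint at the domain values to be mapped.
        "top_values": counts.most_common(3),
    }


profile = profile_column("crm", "country", ["US", "us", "US", None, ""])
print(profile)
```

One such row per source column, loaded into a table, is the sort of data the Excel pivot tables in 2a could be built on. Here the profile already exposes an anomaly: "US" and "us" count as two distinct domain values.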

This process will result in a comprehensive set of deliverables that, in their final state, will be the logical and physical data models for MDM. In addition the client will have the necessary data dictionary definitions as well as high-level source to target mappings.

My concern with presenting a high-level logical MDM model to users is that it is intuitively too simplistic and too straightforward. Obviously at the high level the MDM constructs look very logical for customer, location, products, etc.

They don’t expose the complexity and frankly the magic (hard work), from an MDM perspective, of the underlying and supporting source reference models and associative models that are really the heart and soul of the final model. The sooner we can engage the client in an organized, facilitated, methodological process of simultaneously profiling and understanding their existing data and defining the final state, a.k.a. the MDM model they are looking for, the better we’re going to be at managing long-term expectations.

There’s an old saying which I think applies here, especially in relation to users and expectations: “They get what they inspect, not what they expect.”

I know that this went on a bit long, and I apologize; I certainly don’t mean to lecture. But I think the crux of the problem in a waterfall or traditional approach to creating an MDM model is overcome by following a more iterative or agile approach.

The reality is that the MDM model is the heart of the integration engine.

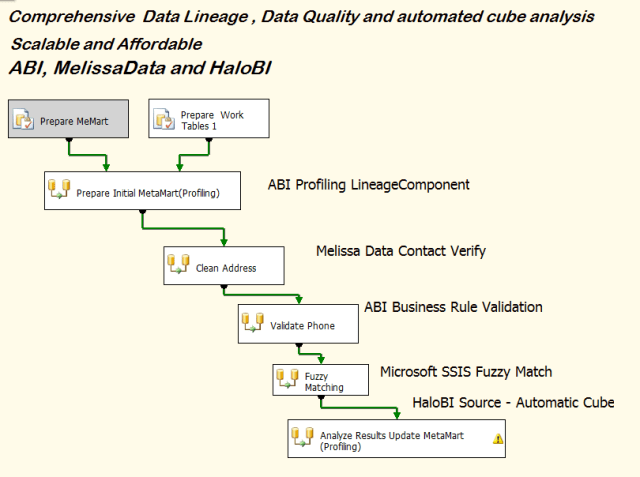

Microsoft BI Stack Leveraged with Vendor Add-Ins for Data Quality and Data Lineage

Low-cost strategic Business Analytics and Data Quality

This paper will outline the advantages of developing your complete Business Analytics solution within the Microsoft suite of Business Intelligence capabilities, including Microsoft SQL Server (SSIS, SSAS, SSRS) and Microsoft Office.

This approach will yield a very powerful and detailed analytical application focused on your business and its particular needs, both data sources and business rules. In addition to a reporting and analytical solution, you will have insight into and access to the data and business rule “Lineage” or DNA, allowing you to click on any anomaly in any dashboard and not only drill into the detail, but also see how the data was transformed and/or changed as it was processed to create your dashboard, Excel workbook or report.

Lineage, as it applies to data in Business Intelligence, provides the capability to track back where data came from. Essentially it supports “Metric Decomposition”, which is a process for breaking down a metric (business calculation) into separate parts. With this capability you can determine your data’s ancestry or Data DNA.

Why does this matter? The key to Lower Cost of Ownership, that’s why.

An “Absolute Truth” in today’s environment (Business Discovery, Big Data, Self Service Whatever?) is that you never have enough detail and usually have to stop clicking before you have an answer to your question and look at another report or application. Just when you are about to get to the “bottom of it” you run out of clicks. As Donald Farmer (VP Product Management) pointed out recently, we are hunters and that’s why we like search engines. We enter phrases for the things we’re hunting, and the search engine presents a large list in which we follow the “tracks” of what we are looking for. If we go down a wrong path we backtrack and continue, continually getting closer to our answer. Search engines implement lineage through “key words” and their relationship to web pages, which in turn link to other pages. This capability is now “heuristic” or common sense.

While this method of analyzing data is “Rule of Thumb” for internet searches, it is not for analyzing your business reports.

If you were able to “link” all your data from its original source to the final destination, then you would have the ability to “hunt” through your data the way you hunt with Google, Bing etc…

Lineage Example

Let’s apply this concept to customer records with phone numbers (phone1 column). First we clean the phone number (phone1Cleansed column). We keep both columns in our processing results. In addition we keep the name of the “Business Rule” we used to clean it (phone1_Category), an indicator identifying valid or invalid phone numbers (phone1_Valid) and the actual column name used as a source (phone1_ColumnName). The “phone1_Rule” column tells us which rules were used: the first row used only the Phone Parse rule to format the number, while the second row used two rules. NumbersOnly tells us that the number contains non-numeric characters, and the Phone Parse rule formats the number with periods separating the area code, prefix and suffix.

The end result is when this is included in your reporting or analytical solution you know:

- Who: The process that applied the change, if you also log package name(Optional)

- What: The final corrected or standardized value(phone1Cleansed)

- Where: Which column originated the value.(phone1_ColumnName)

- When: The date and time of the change.(Optional)

- Why: Which rules were invoked to cause the transformation.(phone1_Category, phone1_Rule and phone1_Valid)
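The lineage columns above can be sketched in a few lines of Python. This is a simplified illustration, not the SSIS or vendor implementation: the function cleanse_phone, the 10-digit validity check and the "|" separator in phone1_Rule are my assumptions, and phone1_Category is left out because its values are not defined here.

```python
import re


def cleanse_phone(raw, column_name="phone1"):
    """Clean one phone number and keep its lineage alongside the result."""
    rules = []
    digits = re.sub(r"\D", "", raw)

    # NumbersOnly: fires when non-numeric characters had to be stripped.
    if digits != raw:
        rules.append("NumbersOnly")

    # Assumption: a valid number has exactly 10 digits.
    valid = len(digits) == 10
    if valid:
        # PhoneParse: area code, prefix and suffix separated by periods.
        cleansed = f"{digits[:3]}.{digits[3:6]}.{digits[6:]}"
        rules.append("PhoneParse")
    else:
        cleansed = None

    return {
        "phone1": raw,                      # original value is kept
        "phone1Cleansed": cleansed,         # What
        "phone1_Valid": valid,              # Why
        "phone1_Rule": "|".join(rules),     # Why
        "phone1_ColumnName": column_name,   # Where
    }


for raw in ["5551234567", "(555) 123-4567"]:
    print(cleanse_phone(raw))
```

The first value only needs formatting, so it records the PhoneParse rule alone; the second contains punctuation, so it records both NumbersOnly and PhoneParse. Because the original value travels with the cleansed one, a report can always show how a number was changed.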

In addition, if you then choose to implement data mining and/or “Predictive Analytics”, already a part of the Microsoft BI Suite, without lineage you will face the typical pitfalls associated with trying to predict based on either “dirty data” or highly “scrubbed data”.

I have assembled a collection of vendor products that, when used in conjunction with the Microsoft BI suite, can provide this capability at a reasonable cost, in line with your investment in Microsoft SQL Server.

If done manually this would require extensive additional coding, however with the tools we have selected, this can be accomplished automatically as well as automating the loading of an Analysis Services cube for Analytics.

Our solution brings together the Microsoft offerings of Actuality Business Intelligence, Melissa Data and HaloBI. This solution can be implemented by any moderately skilled Microsoft developer familiar with the BI Suite(SSIS and SSAS) and does not require any expensive niche ETL or Analytical software.

In the next post I will walk through a real world implementation.

Microsoft SSIS Package

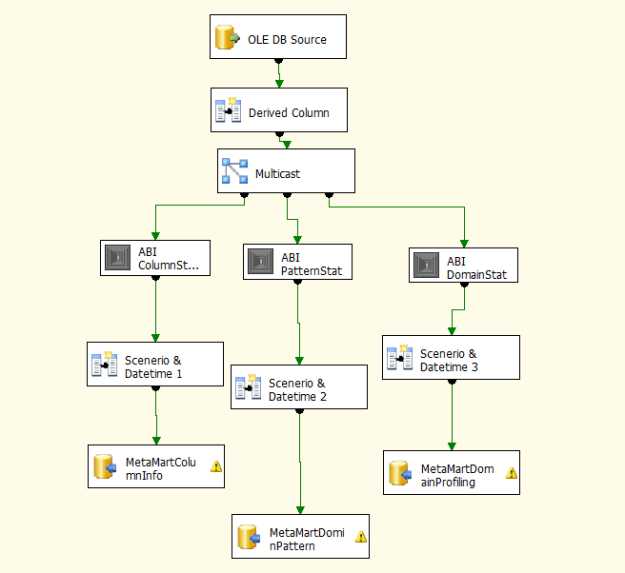

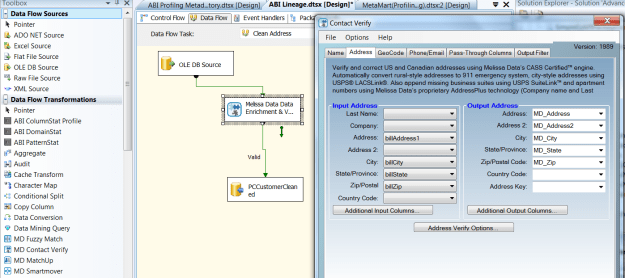

Data Lineage SSIS Example using ABI Profiling, Melissa Data, SSIS Fuzzy Grouping, SSAS cube generation

Actuality Business Intelligence SSIS Profiling

Melissa Data Contact Verify

Awarement or Perception is Perception “Awareness is Reality” Part Deux

Recently our friend Andy Leonard had an interesting post regarding his faith, changing priorities and achieving what I believe is “Awarement”, which I found inspirational and thought-provoking.

“Awarement” is the established form of awareness. Once one has accomplished their sense of awareness they have come to terms with awarement.

As I have experienced, many of us who are driven to love and provide for our families can get caught up in creating a “Perception” of ourselves to achieve greater income, normally through titles or recognition by our peers, and this can compete with our family time, due to the “perception” that it has higher priority.

As we gain success we achieve an Awarement of success and thereby a perception of success. However, as time goes on and events overtake us, our Awarement changes as our own self-perception evolves, so the reality that was our perception is revised. “Perception is reality”? I don’t think so.

My own transformation was a few years back, when Tessie and I faced death and through the grace of God moved past it.

Perception is Perception “Awareness is Reality”

If I might suggest, I have also studied some of The Gospel of Mary as found in the Berlin Gnostic Codex, and find it helpful.

excerpt – – – –

8) And she began to speak to them these words: I, she said, I saw the Lord in a vision and I said to Him, Lord I saw you today in a vision. He answered and said to me,

9) Blessed are you that you did not waver at the sight of Me. For where the mind is there is the treasure.

10) I said to Him, Lord, how does he who sees the vision see it, through the soul or through the spirit?

11) The Savior answered and said, He does not see through the soul nor through the spirit, but the mind that is between the two that is what sees the vision and it is […]

I have included some of Plato’s teachings and writings in my exploration and understanding of “Awarement” via the Allegory of the Cave.

Ira

Last but not least, I also find Yoda quite interesting.

"Do, or do not. There is no try."

"Karo yaa na karo, koshish jaisa kuch nahi hai."