
Evolving Data Into Information through Lineage

Information lineage through data DNA

Ira Warren Whiteside

ORIGINS

COMMON SENSE

We in IT have complicated and diluted the concept and process of analyzing data and business metrics incredibly in the last few decades. We seem to be focusing on the word data.

“There is a subtle difference between data and information.”

Information vs data

There is a subtle difference between data and information. Data are the facts or details from which information is derived. Individual pieces of data are rarely useful alone. For data to become information, data needs to be put into context.

Examples of Data and Information

The history of temperature readings all over the world for the past 100 years is data.

If this data is organized and analyzed to find that global temperature is rising, then that is information.

The number of visitors to a website by country is an example of data.

Finding out that traffic from the U.S. is increasing while that from Australia is decreasing is meaningful information.

Often data is required to back up a claim or conclusion (information) derived or deduced from it.

For example, before a drug is approved by the FDA, the manufacturer must conduct clinical trials and present a lot of data to demonstrate that the drug is safe.

“Misleading” Data

Because data needs to be interpreted and analyzed, it is quite possible — indeed, very probable — that it will be interpreted incorrectly. When this leads to erroneous conclusions, it is said that the data are misleading. Often this is the result of incomplete data or a lack of context.

For example, your investment in a mutual fund may be up by 5% and you may conclude that the fund managers are doing a great job. However, this could be misleading if the major stock market indices are up by 12%. In this case, the fund has underperformed the market significantly.

Comparison chart

Synthesis: the combining of the constituent elements of separate material or abstract entities into a single or unified entity (as opposed to analysis, the separating of any material or abstract entity into its constituent elements).

Synthesis

Data into Information is dominant in terms of data movement and replication, in essence data logistics.

Lineage is the key.

The simple action of linking data file metadata names to a business glossary of terms will result in deeply informative business insight and analysis.

“Analysis: the separating of any material or abstract entity into its constituent elements”

For a business manager to do analysis, you need to be able to start the analysis from understandable business terminology.

And then provide the manager with the ability to decompose or break apart the result.
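The linking step described above can be sketched in a few lines of Python. This is a minimal sketch, not the method used on any project described here: the glossary entries, the column names, and the `link_columns` helper are all hypothetical, and the matching relies on the standard-library `difflib` module rather than any particular vendor tool.

```python
from difflib import get_close_matches

# Hypothetical business glossary: business term -> definition.
GLOSSARY = {
    "subscriber id": "Unique identifier of the insured member",
    "plan type": "Product line such as PPO or HMO",
    "claim amount": "Billed charge for a claim",
}

def normalize(column_name: str) -> str:
    """Turn a physical column name like 'CLAIM_AMT' into 'claim amt'."""
    return column_name.replace("_", " ").strip().lower()

def link_columns(columns, glossary, cutoff=0.6):
    """Map each physical column to its closest glossary term, or None."""
    terms = list(glossary)
    links = {}
    for col in columns:
        match = get_close_matches(normalize(col), terms, n=1, cutoff=cutoff)
        links[col] = match[0] if match else None
    return links

# Hypothetical column names from a source file.
links = link_columns(["SUBSCRIBER_ID", "PLAN_TYP", "CLAIM_AMT", "ZZ_FILLER"], GLOSSARY)
for column, term in links.items():
    print(column, "->", term)
```

Columns that fail to link to any term (such as the filler column) are themselves useful output: they show where the glossary or the source metadata needs attention.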

There are three essential sets of capabilities and associated techniques for analysis and lineage.

- Data profiling and domain analysis as well as fuzzy matching components available on my blog https://irawarrenwhiteside.com/2014/04/13/creating-a-metadata-mart-via-tsql/

- Meta-data driven creation of a meta-data mart through code generation techniques, implemented.

Underlying each of these capabilities is a set of refined, developed and proven code sets for accomplishing these basic, fundamental tasks.

One case study

I have been in this business over 45 years and I’d like to offer one example of the power of the concept of a meta-data mart and lineage as it relates to business insight.

A lineage, information and data story for BCBS

I was called on Thursday and told to attend a meeting on Friday between our company’s leadership and the new Chief Analytics Officer. He was a prototypical “new school” IT director.

I had been introduced via LinkedIn to this director a week earlier as he had followed one of my blogs on metadata marts and lineage.

After a brief introduction, our leadership began to speak and the director immediately held up his hand. He said, “Please don’t say anything right now. The profiling you provided me is at the kindergarten level and you are dishonest.”

The project was a 20 week $900,000 effort and we were in week 10.

The company had wanted to do a proof of concept and better understand the use of the Informatica Data Quality (IDQ) tool, as well as set direction for a data governance program.

To date, what had been accomplished was an accumulation of billed hours of effort that had not resulted in any tangible deliverable.

The project had focused on the implementation and functionality of the popular vendor tool and on canned data profiling results, not on providing information to the business.

The director commented on my blog post and asked if we could achieve that at his company. I of course said yes.

I immediately proposed a methodology that would allow us to focus on a top-down process of understanding critical business metrics and a bottom-up process of linking data to business terms.

My basic premise was that unless the deliverable from a data quality project can provide business insight from the top down, it is of little value. In essence you’ll spend $900,000 to tell a business executive they have dirty data, at which point he will say to you, “So what’s new?”

The next step was to use the business terminology glossary that existed in Informatica Metadata Manager and map those terms to source data columns and source systems. This is not an extremely difficult exercise; however, it is the critical step in giving a business manager the understanding and context of data statistics.

The step after that was the crucial one: we made a slight modification to the IDQ tool that allowed us to store the profiling results in a meta-data mart and to associate a business dimension from the business glossary with the reporting statistics.

We were able to populate my predefined metadata mart dimensional model by using the tool the company had already purchased.

Lastly by using a dimensional model we were able to allow the business to apply their current reporting tool.
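The idea of storing profiling results together with a business dimension can be sketched with pandas. This is an illustrative sketch only, not the IDQ modification described above: the `profile_columns` helper, the sample rows, the source system name, and the glossary mapping are all hypothetical.

```python
import pandas as pd

def profile_columns(df: pd.DataFrame, source_system: str) -> pd.DataFrame:
    """Return one row of basic statistics per column (a tiny profiling fact table)."""
    rows = []
    for col in df.columns:
        series = df[col]
        rows.append({
            "source_system": source_system,
            "column_name": col,
            "row_count": len(series),
            "null_count": int(series.isna().sum()),
            "distinct_count": int(series.nunique(dropna=True)),
        })
    return pd.DataFrame(rows)

# Hypothetical source extract.
members = pd.DataFrame({
    "SSN": ["123-45-6789", None, "123456789"],
    "PLAN_TYP": ["PPO", "PPO", "HMO"],
})

# Hypothetical mapping of physical columns to business glossary terms.
glossary_map = pd.DataFrame({
    "column_name": ["SSN", "PLAN_TYP"],
    "business_term": ["Social Security Number", "Plan Type"],
})

# The business term acts as a dimension attached to each profiling fact.
mart = profile_columns(members, "ELIGIBILITY").merge(
    glossary_map, on="column_name", how="left"
)
print(mart)
```

Because the result is a flat fact table keyed by source system and business term, any existing reporting tool can slice the statistics by business terminology.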

Upon realizing the issues they faced in their business metrics, they accelerated the data governance program and canceled the data lake until a future date.

Now for the results.

Within six weeks we provided an executive dashboard based on a meta-data mart that allowed the business to reassess their plans involving governance and a data lake.

Here are some of the results of their ability to analyze their basic data statistics but mapped to their business terminology.

- 6,000 improperly formed SSNs

- 35,000 dependents of subscribers over 35 years old

- Thousands of charges to PPO plans out of the counties they were restricted to.

- There were mysterious double counts in patient eligibility counts. Managers were now able to drill into those counts by source system and find that a simple Syncsort utility had been used improperly and had duplicated records.


Merry Christmas: Data Classification, Feature Engineering, Data Governance. ‘How to’ do it, and some code. Take a look

I was heavily involved in business intelligence, data warehousing and data governance until several years ago, and have recently had many chaotic personal challenges. Upon returning to professional practice I have discovered that things have not changed that much in 10 years. The methodologies and approaches are still relatively consistent; however, the tools and techniques have changed, and in my opinion not for the better. Without focusing on specific tools, I’ve observed that the core of data governance or MDM is providing a capability for classifying data into business categories or nomenclature, and it has really not improved.

- This basic traditional approach has not changed. In essence, an AI model predicts a metric and is wholly dependent on the integrity of its features, or dimensions.

Therefore I decided to update some of the techniques and code patterns I’ve used in the past regarding the information value chain and record linkage, and we are going to make the results available with associated business and code examples, initially with SQL Server and Databricks plus Python.

My good friend Jordan Martz, of DataMartz fame, has greatly contributed to this old man’s Big Data enlightenment, as has Craig Campbell in updating some of the basic classification capabilities required and critical for data governance. If you would like a more detailed version of the source as well as the test data, please send me an email at iwhiteside@msn.com. Stay tuned for more updates; soon we will add neural network capability for additional automation of “Governance Type” automated classification and confidence monitoring.

Before we focus on functionality let’s focus on methodology

Initially, understand the key metrics/KPIs to be measured, their formulas and, of course, the business’s expectations of their calculations.

Immediately gather file sources and complete profiling as specified in my original article found here

Implementing the processes in my meta-data mart article would provide numerous statistics regarding integer or float fields; however, there are some special considerations for text fields or smart codes.

Before beginning classification you would employ similarity matching or fuzzy matching as described here

As I said, I posted the code for this process on SQL Server Central 10 years ago. Here is a Python version.
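One common way to handle the special case of text fields and smart codes is pattern (mask) profiling, where each value is reduced to its shape. The following is a minimal sketch under that assumption; it is not taken from the meta-data mart article, and the `pattern_mask` and `profile_patterns` helpers and the sample values are hypothetical.

```python
import re
from collections import Counter

def pattern_mask(value: str) -> str:
    """Replace letters with 'A' and digits with '9', keeping punctuation as-is."""
    masked = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", masked)

def profile_patterns(values):
    """Count how often each mask occurs, ignoring missing values."""
    return Counter(pattern_mask(v) for v in values if v is not None)

# Hypothetical SSN-like values from a source column.
ssn_values = ["123-45-6789", "987-65-4321", "123456789", "12-345-678X", None]
patterns = profile_patterns(ssn_values)
for mask, count in patterns.most_common():
    print(mask, count)
```

A dominant mask with a long tail of rare masks is usually the quickest way to spot improperly formed codes in a text column.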

Roll Your Own – Python Jaro_Winkler (Python)


Step 1a – Import pandas

    import pandas

Step 2 – Import libraries

    from pyspark.sql.functions import input_file_name
    from pyspark.sql.types import *
    import datetime, time, re, os, pandas

ML libraries

    from pyspark.ml.feature import RegexTokenizer, StopWordsRemover, NGram, HashingTF, IDF, Word2Vec, Normalizer, Imputer, VectorAssembler
    from pyspark.ml import Pipeline
    import mlflow
    from mlflow.tracking import MlflowClient
    from sklearn.cluster import KMeans
    import numpy as np

Step 3 – Test JaroWinkler

    JaroWinkler('TRAC', 'TRACE')

    Out[5]: 0.933333

Step 4a – Implement JaroWinkler (Fuzzy Matching)

    %python
    def JaroWinkler(str1_in, str2_in):
        # Note: no Winkler prefix bonus is applied here, so the value
        # returned is the plain Jaro similarity.
        if str1_in is None or str2_in is None:
            return 0.0
        tr = 0.0          # transpositions
        common = 0        # matched characters
        jaro_value = 0.0
        len_str1 = len(str1_in)
        len_str2 = len(str2_in)
        #clean_string(str1_in)
        #clean_string(str2_in)
        # Make str1_in the shorter of the two strings
        if len_str1 > len_str2:
            len_str1, len_str2 = len_str2, len_str1
            str1_in, str2_in = str2_in, str1_in
        max_len = len_str2
        # One flag row per character; FStatus becomes 1 once the character is matched
        df_temp_table1 = pandas.DataFrame({'FID': range(1, len_str1 + 1), 'FStatus': 0})
        df_temp_table2 = pandas.DataFrame({'FID': range(1, len_str2 + 1), 'FStatus': 0})
        # Match window, kept as an integer so it can be used for indexing
        m = max(max_len // 2 - 1, 0)
        i = 1
        while i <= len_str1:
            a1 = str1_in[i-1]
            f = 1 if m >= i else i - m
            z = min(i + m, max_len)
            while f <= z:
                a2 = str2_in[f-1]
                if a2 == a1 and df_temp_table2.at[f-1, 'FStatus'] == 0:
                    common = common + 1
                    df_temp_table1.at[i-1, 'FStatus'] = 1
                    df_temp_table2.at[f-1, 'FStatus'] = 1
                    break
                f = f + 1
            i = i + 1
        # Count transpositions by walking the matched characters of both strings in order
        i = 1
        z = 1
        while i <= len_str1:
            if df_temp_table1.at[i-1, 'FStatus'] == 1:
                while z <= len_str2:
                    if df_temp_table2.at[z-1, 'FStatus'] == 1:
                        a1 = str1_in[i-1]
                        a2 = str2_in[z-1]
                        z = z + 1
                        if a1 != a2:
                            tr = tr + 0.5
                        break
                    z = z + 1   # skip unmatched characters in the longer string
            i = i + 1
        wcd = 1.0/3.0
        wrd = 1.0/3.0
        wtr = 1.0/3.0
        if common != 0:
            jaro_value = (wcd * common) / len_str1 + (wrd * common) / len_str2 + (wtr * (common - tr)) / common
        return round(jaro_value, 6)

Step 4b – Register JaroWinkler

    # Register with an explicit return type so SQL can sort on the score numerically
    spark.udf.register("JaroWinkler", JaroWinkler, DoubleType())
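For cross-checking the 0.933333 result shown in Step 3 outside of Databricks, a compact list-based version can help. This is a sketch of the standard Jaro similarity (no Winkler prefix bonus), not the notebook's implementation; the function name `jaro` and the use of plain Python lists instead of pandas are choices made for this example.

```python
def jaro(s1: str, s2: str) -> float:
    """Plain Jaro similarity, rounded to 6 decimals."""
    if not s1 or not s2:
        return 0.0
    if len(s1) > len(s2):
        s1, s2 = s2, s1                      # s1 is the shorter string
    window = max(len(s2) // 2 - 1, 0)        # standard Jaro match window
    matched1 = [False] * len(s1)
    matched2 = [False] * len(s2)
    common = 0
    for i, ch in enumerate(s1):
        lo = max(0, i - window)
        hi = min(len(s2), i + window + 1)
        for j in range(lo, hi):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                common += 1
                break
    if common == 0:
        return 0.0
    # Matched characters in order; half the mismatches are transpositions.
    seq1 = [c for c, m in zip(s1, matched1) if m]
    seq2 = [c for c, m in zip(s2, matched2) if m]
    transpositions = sum(a != b for a, b in zip(seq1, seq2)) / 2
    score = (common / len(s1) + common / len(s2) + (common - transpositions) / common) / 3
    return round(score, 6)

print(jaro("TRAC", "TRACE"))      # 0.933333
print(jaro("MARTHA", "MARHTA"))   # 0.944444
```

Keeping the match flags in lists avoids a DataFrame lookup per character, which matters once the function is called for every pair in a cross join.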

Step 8a – Bridge vs Master vs AssociativeALL

    %sql
    DROP TABLE IF EXISTS NameAssociative;

    -- Every lookup name is scored against every input name.
    -- MatchRank is an illustrative alias; the original rank alias was garbled.
    CREATE TABLE NameAssociative AS
    SELECT
       a.NameLookup
      ,b.NameInput
      ,sha2(regexp_replace(a.NameLookup, '[^a-zA-Z0-9, ]', ' '), 256) AS NameLookupCleaned
      ,a.NameLookupKey
      ,sha2(regexp_replace(b.NameInput, '[^a-zA-Z0-9, ]', ' '), 256) AS NameInputCleaned
      ,b.NameInputKey
      ,JaroWinkler(a.NameLookup, b.NameInput) AS MatchScore
      ,RANK() OVER (PARTITION BY a.DetailedBUMaster
                    ORDER BY JaroWinkler(a.NameLookup, b.NameInput) DESC) AS MatchRank
    FROM NameLookup AS a
    CROSS JOIN NameInput AS b
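The same cross-join-and-rank pattern can be tried locally with pandas before running it in Spark. This is a sketch under stated assumptions: the sample names are made up, `MatchRank` is an illustrative column name, and the scorer is the standard-library `difflib.SequenceMatcher` standing in for the JaroWinkler UDF.

```python
import pandas as pd
from difflib import SequenceMatcher

def similarity(a: str, b: str) -> float:
    """Stand-in scorer; swap in a Jaro or Jaro-Winkler function if one is available."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

# Hypothetical input and lookup names.
name_input = pd.DataFrame({"NameInput": ["Acme Corp", "Globex Inc"]})
name_lookup = pd.DataFrame({"NameLookup": ["ACME Corporation", "Globex Incorporated", "Initech"]})

# Cross join: every input name paired with every lookup name.
pairs = name_input.merge(name_lookup, how="cross")
pairs["MatchScore"] = [
    similarity(a, b) for a, b in zip(pairs["NameInput"], pairs["NameLookup"])
]

# Rank candidates within each input name, best score first.
pairs["MatchRank"] = (
    pairs.groupby("NameInput")["MatchScore"]
    .rank(method="min", ascending=False)
    .astype(int)
)
best = pairs[pairs["MatchRank"] == 1].reset_index(drop=True)
print(best[["NameInput", "NameLookup", "MatchScore"]])
```

A cross join grows as the product of the two table sizes, so on real data it is worth blocking on a cheap key (for example the first letter or a postal code) before scoring.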

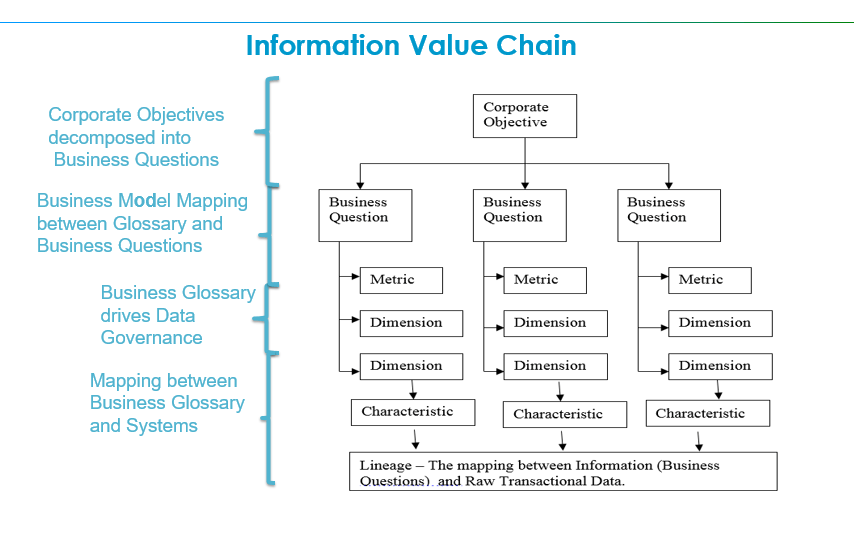

Navigating the Information Value Chain

Data Governance


Demystifying the path forward

The challenge for businesses is to seek answers to questions. They do this with metrics (KPIs) and by knowing the relationships of the data, organized by logical categories (dimensions), that make up the result or answer to the question. This is what constitutes the Information Value Chain.

Navigation

Let’s assume that you have a business problem, a business question that needs answers and you need to know the details of the data related to the business question.

Information Value Chain


• Business is based on Concepts.

• People think in terms of Concepts.

• Concepts come from Knowledge.

• Knowledge comes from Information.

• Information comes from Formulas.

• Formulas determine Information relationships based on quantities.

• Quantities come from Data.

• Data physically exist.
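The chain above can be walked in code from a metric back down to its rows. The following is a toy sketch of that drill-through idea; the `Metric` class, the `drill` helper, the "paid ratio" metric, and the claim rows are all hypothetical and chosen only to make the chain concrete.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Metric:
    name: str
    formula_text: str                              # human-readable formula
    formula: Callable[[Dict[str, float]], float]   # computes the metric from quantities
    inputs: List[str]                              # quantities the formula uses

# Data physically exists: hypothetical rows from a claims source.
claims = [
    {"plan": "PPO", "paid": 120.0, "billed": 200.0},
    {"plan": "PPO", "paid": 80.0, "billed": 100.0},
    {"plan": "HMO", "paid": 50.0, "billed": 100.0},
]

def quantities(rows):
    """Quantities come from data."""
    return {
        "paid": sum(r["paid"] for r in rows),
        "billed": sum(r["billed"] for r in rows),
    }

# Information comes from formulas applied to quantities.
paid_ratio = Metric(
    name="Paid ratio",
    formula_text="paid / billed",
    formula=lambda q: q["paid"] / q["billed"],
    inputs=["paid", "billed"],
)

def drill(metric, rows, dimension):
    """Decompose a metric by a dimension, one step back toward the rows."""
    groups = {}
    for r in rows:
        groups.setdefault(r[dimension], []).append(r)
    return {key: metric.formula(quantities(group)) for key, group in groups.items()}

overall = paid_ratio.formula(quantities(claims))
by_plan = drill(paid_ratio, claims, "plan")
print(overall, by_plan)
```

The point of the sketch is that the overall number and each drilled number come from the same formula over the same rows, so every result can be traced back to data.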

In today’s fast-paced, high-tech business world this basic navigation (drill-through) concept is fundamental, yet it seems to be overlooked in the zeal to embrace modern technology.

In our quest to embrace fresh technological capabilities, a business must realize that you can only truly discover new insights when you can validate them against your business model, or your business’s Information Value Chain, which is currently creating your information or results.

Today data needs to be deciphered into information in order to apply formulas to determine relationships and validate concepts, in real time.

We are inundated with technical innovations and concepts. It’s important to note that business is driving these changes, not necessarily technology.

Business is constantly striving for better insights, better information and increased automation, as well as lower cost while doing these things. Several of these were examined in John Thuma’s latest article.

Historically these changes were few and far between; however, innovations in hardware and storage technology, as well as in software and compute, have led to a rapid unveiling of newer concepts and new technologies.

Demystifying the path forward.

In this article we’re going to review the basic principles of information governance required for a business to measure its performance, as well as explore some of the connections to these new technological concepts for lowering cost.

To a large degree I think we’re going to find that why we do things has not changed significantly; it’s just how. We now have different ways to do them.

It’s important while embracing new technology to keep in mind that some of the basic concepts, ideas and goals on how to properly structure and run a business have not changed, even though many more insights and much more information and data are now available.

My point is that implementing these technological advances could be worthless to the business, and maybe even destructive, unless they are associated with an actual set of Business Information Goals (measurements, KPIs) and linked directly to understandable business deliverables.

Moreover, prior to even considering or engaging in data science or attempting data mining, you should organize your datasets, capture the relationships, apply a “scoring” or “ranking” process, and be able to relate them to your business information model or Information Value Chain, with the concept of quality applied in real time.

The foundation for a business to navigate its Information Value Chain is an underlying Information Architecture. An Information Architecture typically involves a model or concept of information that is used and applied to activities which require explicit details of complex information systems.

Subsequently, data management and databases are required; they form the foundation of your Information Value Chain. To bring this back to the business goal, let’s take a quick look at the difference between relational database technology and graph technology as a part of emerging big data capabilities.

However, the timeframe of database technology evolution has introduced a cultural aspect to implementing new technology changes: basically, resistance to change. Businesses that are running their current operations with technology and people from the 80s and 90s have a different perception of a solution than folks from the 2000s.

Therefore, in this case, regarding a technical solution, “perception is not reality”; awarement is. Businesses need to find ways to bridge the knowledge gap and increase awarement that simply embracing new technology will not fundamentally change why a business operates; however, it will affect how.

Relational databases were introduced in 1970, and graph database technology was introduced in the mid-2000s.

There are many topics included in the current Big Data concept to analyze; however, the foundation is the Information Architecture and the databases utilized to implement it.

There were some other advancements in database technology in between as well; however, let’s focus on these two.

History

1970

In a 1970s relational database, based on mathematical set theory, you could pre-define the relationships between tables, implement them in a hardened structure, then query them by manually joining the tables through physically named attributes, and gain much better insight than with previous database technology. However, if you needed a new relationship it would require manual effort and then migration from old to new. In addition, your answer was only as good as the hard-coded query created.

2020

In the mid-2000s the graph database was introduced. Based on graph theory, it defines relationships as tuples containing nodes and edges. Graphs represent things, and relationships (edges) describe connections between things, which makes a graph an ideal fit for navigating relationships. Unlike conventional table-oriented databases, graph databases (for example Neo4j, Neptune) represent entities and the relationships between them. New relationships can be discovered and added easily and without migration, with much less manual effort.

Nodes and Edges

Graphs are made up of ‘nodes’ and ‘edges’. A node represents a ‘thing’ and an edge represents a connection between two ‘things’. The ‘thing’ in question might be a tangible object, such as an instance of an article, or a concept such as a subject area. A node can have properties (e.g. title, publication date). An edge can have a type, for example to indicate what kind of relationship the edge represents.
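The node-and-edge description above maps directly onto a small in-memory structure. This is a minimal sketch, not how Neo4j or Neptune store data; the `Graph` class, the node identifiers, the property values, and the edge type names are all illustrative.

```python
from collections import defaultdict

class Graph:
    def __init__(self):
        self.nodes = {}                      # node id -> properties
        self.edges = defaultdict(list)       # node id -> [(edge type, target id)]

    def add_node(self, node_id, **properties):
        self.nodes[node_id] = properties

    def add_edge(self, source, edge_type, target):
        self.edges[source].append((edge_type, target))

    def neighbors(self, node_id, edge_type=None):
        """Targets reachable from node_id, optionally filtered by edge type."""
        return [
            target
            for (etype, target) in self.edges[node_id]
            if edge_type is None or etype == edge_type
        ]

g = Graph()
# A tangible thing (an article) and a concept (a subject area), each with properties.
g.add_node("article:1", title="Creating a Metadata Mart", publication_date="2014-04-13")
g.add_node("subject:dq", label="Data Quality")
g.add_edge("article:1", "HAS_SUBJECT", "subject:dq")

# A new kind of relationship needs no schema migration, only another edge.
g.add_node("author:1", name="Ira Warren Whiteside")
g.add_edge("article:1", "WRITTEN_BY", "author:1")

print(g.neighbors("article:1"))
print(g.neighbors("article:1", "WRITTEN_BY"))
```

Adding the `WRITTEN_BY` relationship required no change to existing nodes or edges, which is the contrast with the relational migration effort described earlier.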

Takeaway.

The takeaway is that there are many spokes on the cultural wheel in a business today, encompassing business acumen, technology acumen, information relationships and raw data knowledge. While they are all equally critical to success, the absolutely critical step is that the logical business model, defined as the Information Value Chain, is maintained and enhanced.

It is a given that all businesses desire to lower cost and gain insight into information. It is imperative that a business maintain and improve its ability to provide accurate information that can be audited and traced, and to navigate the Information Value Chain. Data Science can only be achieved after a business fully understands its existing Information Architecture and strives to maintain it.

Note as I stated above an Information Architecture is not your Enterprise Architecture. Information architecture is the structural design of shared information environments; the art and science of organizing and labelling websites, intranets, online communities and software to support usability and findability; and an emerging community of practice focused on bringing principles of design, architecture and information science to the digital landscape. Typically, it involves a model or concept of information that is used and applied to activities which require explicit details of complex information systems.

In essence, a business needs a Rosetta stone in order to translate past, current and future results.

In future articles we’re going to explore and dive into how these new technologies can be utilized and, more importantly, how they relate to business outcomes.

Actuality Business Intelligence – the path to Enlightenment

Coming soon. . .

Organic Data Quality vs Machine Data Quality

Reading a few recent blog posts on centralized data quality vs. distributed data quality has provoked me to offer another point of view.

Organic distributed data quality provides today’s awareness for all current source analysis and problem resolution, and in most cases it is accomplished manually by individuals.

In many cases, when management or leadership (folks worried about their jobs) are presented with any type of organized, machine-based data quality results that can easily be viewed and understood and are permanently available, the usual result is to kill or discredit the messenger.

Automated “Data Quality Scoring” (Profiling + Metadata Mart) brings them from a general “awareness” to a realized state “awarement”.

Awarement is the established form of awareness. Once one has accomplished their sense of awareness they have come to terms with awarement.

It’s one thing to know that alcohol can get you drunk; it is quite another to be aware that you are drunk when you are drunk.

Perception = Perception

Awarement = Reality

Departmental DQ = Awareness

vs

Centralized DQ = Awarement

Share the love… of Data Quality– A distributed approach to Data Quality may result in better data outcomes

Metadata Mart the Road to Data Governance

Implementing a Metadata Mart the Road to Data Governance best viewed in PRESENTATION Mode, there is animation.

This presentation has narrative, play in presentation mode with sound on.

Self Service Semantic BI (Business Intelligence) Concept

We want to build an Enterprise Analytical capability by integrating the concepts for building a Metadata Mart with the facilities for the Semantic Web


- Metadata Mart Source

- (Metadata Mart as is) Source Profiling(Column, Domain & Relationship)

- +

- (Metadata Mart Plus Vocabulary(Metadata Vocabulary)) Stored as Triples(subject-predicate-object) (SSIS Text Mining)

- +

- (Metadata Mart Plus) Create Metadata Vocabulary following RDFa applied to Metadata Mart Triple (SSIS Text Mining + Fuzzy (SPARQL maybe))

- +

- Bridge to RDFa – JSON-LD via Schema.org

- Master Data Vocabulary with lineage (Metadata Vocabulary + Master Vocabulary, mapped to MetaContent Statements) based on person.schema.org

- Creates link to legacy data in data warehouse

- +RDFa applied to web pages

- +JSON-LD applied to

- + any Triples from any source

- Semantic Self Service BI

- Metadata Mart Source + Bridge to RDFa

I have spent quite a while on this now, and I believe there is quite a bit of merit in collecting the domain data and column profile data of the meta-data mart and organizing them as triples.

The basis for JSON-LD and RDFa is the collection of data as a triple.

Delving deeper, I believe that with the proper mapping of the object reference and derivation of the appropriate predicates when collecting the values, we could gain some of the same benefits, as well as bring in the web data being collected, thereby linking it to source data.

Consider the following excerpt regarding Vocabularies derived from MetaContent via Metadata Structure

“Metadata structures

Metadata (metacontent), or more correctly, the vocabularies used to assemble metadata (metacontent) statements, are typically structured according to a standardized concept using a well-defined metadata scheme, including: metadata standards and metadata models. Tools such as controlled vocabularies, taxonomies, thesauri, data dictionaries, and metadata registries can be used to apply further standardization to the metadata. Structural metadata commonality is also of paramount importance in data model development and in database design.

Metadata syntax

Metadata (metacontent) syntax refers to the rules created to structure the fields or elements of metadata (metacontent). A single metadata scheme may be expressed in a number of different markup or programming languages, each of which requires a different syntax. For example, Dublin Core may be expressed in plain text, HTML, XML, and RDF.

A common example of (guide) metacontent is the bibliographic classification, the subject, the Dewey Decimal class number. There is always an implied statement in any “classification” of some object. To classify an object as, for example, Dewey class number 514 (Topology) (i.e. books having the number 514 on their spine) the implied statement is: “<book><subject heading><514>”. This is a subject-predicate-object triple, or more importantly, a class-attribute-value triple. The first two elements of the triple (class, attribute) are pieces of some structural metadata having a defined semantic. The third element is a value, preferably from some controlled vocabulary, some reference (master) data. The combination of the metadata and master data elements results in a statement which is a metacontent statement i.e. “metacontent = metadata + master data”. All these elements can be thought of as “vocabulary”. Both metadata and master data are vocabularies which can be assembled into metacontent statements.”

The Metadata Mart serves as the source for the Metadata Vocabulary, and MDM as the source for the Master Data Vocabulary.

For the Master Data Vocabulary, consider schema.org, which defines most of the schemas we need. Consider the following schema.org Person properties of objects and predicates:
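Turning column profile results into subject-predicate-object triples can be sketched as follows. This is an illustrative sketch only: the `Triple` type, the `profile_to_triples` helper, the table and column names, and the predicate names are hypothetical, and the Dewey example from the excerpt is added as one more triple.

```python
from typing import List, NamedTuple

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str

def profile_to_triples(table: str, column: str, stats: dict) -> List[Triple]:
    """Emit one triple per profiling statistic for a column."""
    subject = f"{table}.{column}"
    return [Triple(subject, predicate, str(value)) for predicate, value in stats.items()]

# Hypothetical profiling output for one column, including its linked business term.
triples = profile_to_triples(
    "Member",
    "PlanType",
    {"distinctCount": 2, "nullCount": 0, "businessTerm": "Plan Type"},
)

# The classification example from the excerpt, expressed the same way.
triples.append(Triple("book", "subject heading", "514"))

for t in triples:
    print(f"<{t.subject}> <{t.predicate}> <{t.obj}>")
```

Once profile statistics and business terms share the triple shape, they can be stored and queried alongside triples collected from any other source.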

A person (alive, dead, undead, or fictional).

| Property | Expected Type | Description |
| --- | --- | --- |
| Properties from Person | | |
| additionalName | Text | An additional name for a Person, can be used for a middle name. |
| address | PostalAddress | Physical address of the item. |
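The two Person properties listed above can be expressed as JSON-LD with nothing more than the standard library. This is a small sketch; the `person_to_jsonld` helper, the keys of the input record, and the sample values are hypothetical, while `additionalName`, `address`, `PostalAddress`, `streetAddress` and `addressLocality` are schema.org terms.

```python
import json

def person_to_jsonld(record: dict) -> str:
    """Render a source record as a schema.org Person in JSON-LD."""
    doc = {
        "@context": "https://schema.org",
        "@type": "Person",
        "additionalName": record["middle_name"],
        "address": {
            "@type": "PostalAddress",
            "streetAddress": record["street"],
            "addressLocality": record["city"],
        },
    }
    return json.dumps(doc, indent=2)

# Hypothetical conformed master data record.
record = {"middle_name": "Warren", "street": "1 Example Way", "city": "Springfield"}
jsonld = person_to_jsonld(record)
print(jsonld)
```

Each key-value pair in the output is one predicate-object pair about the same subject, which is how the JSON-LD form lines up with the triples collected in the metadata mart.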

The key is to link source data in the Enterprise via a Business Vocabulary from MDM to the Source Data Metadata Vocabulary from a Metadata Mart to conform the triples collected internally and externally.

In essence information from the web applications can be integrated with the dimensional metadata mart, MDM Model and existing Data Warehouses providing lineage for selected raw data from web to Enterprise conformed Dimensions that have gone thru Data Quality processes.

Please let me know your thoughts.

MAD Skills: New Analysis Practices for Big Data

I have had my first exposure to MAD Skills: New Analysis Practices for Big Data. It is a very interesting approach, in many ways an extreme expansion of the concept of operational analytics via OLAP. The use of the “Sandbox” environment for statisticians and analysts can also be incorporated in current BI applications using the Agile / SCRUM approach modded with Extreme Scoping for BI (Larissa Moss).

I certainly see the advantage of enabling “deep” access for high-end users; however, the issues for broader use are still “Data Prep” and resolving Data Quality issues, as discussed in another thread in the Data Science LinkedIn group. Also, in the article the term “Data Driven” is used in the context of the “Functional” being developed, as opposed to automated reusable processes “Driven” by metadata.

Actually, the entire approach seems to be geared towards being able to run one-off processes, and could be well served by a discussion of applying a Metadata Mart approach (Belcher Gartner) to retaining and reusing results. Also visit the Data Science Group at LinkedIn.